AI Insight Engine: Operational Institutional Memory

Advanced KG-RAG (Knowledge Graph plus Retrieval-Augmented Generation) system that automatically generates Knowledge Graphs from unstructured documents, then provides verifiable answers grounded in organizational expertise rather than generic LLM responses.

The Problem

The Fidelity Challenge

Generic AI defaults to the "wisdom of the crowds": useful for general knowledge, but problematic for organizations that need answers grounded in their specific, hard-won expertise.

Traditional search requires knowing what to look for. When institutional knowledge is fragmented across hundreds of documents, even experienced staff struggle to find context quickly, and new hires have no starting point.

The Solution

Automated Knowledge Graph + KG-RAG

The Insight Engine uses a multi-stage AI pipeline to automatically generate a structured Knowledge Graph (KG) from unstructured data, then integrates that KG with retrieval-augmented generation (KG-RAG).
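As a rough illustration of the retrieval half of that pipeline, the sketch below stores graph facts as triples tagged with their source document, pulls the facts whose entities appear in a question, and builds a prompt restricted to those cited facts. The `Fact` class, the function names, the file names, and the sample facts are all hypothetical, and simple substring matching stands in for whatever entity linking the real system uses.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    """One knowledge-graph edge plus the document it was extracted from."""
    subject: str
    relation: str
    obj: str
    source: str


def retrieve_facts(question: str, facts: list[Fact]) -> list[Fact]:
    """Return facts whose subject or object is mentioned in the question."""
    q = question.lower()
    return [f for f in facts if f.subject.lower() in q or f.obj.lower() in q]


def build_prompt(question: str, facts: list[Fact]) -> str:
    """Assemble an LLM prompt whose context is limited to numbered, cited facts."""
    lines = [
        f"[{i}] {f.subject} {f.relation} {f.obj} (source: {f.source})"
        for i, f in enumerate(facts, start=1)
    ]
    context = "\n".join(lines) if lines else "(no matching facts)"
    return (
        "Answer using only the numbered facts and cite them by number.\n"
        f"Facts:\n{context}\n"
        f"Question: {question}"
    )


# Hypothetical toy graph; a real graph would come from the extraction pipeline.
FACTS = [
    Fact("lease renewal", "requires", "90-day notice", "policy_handbook.pdf"),
    Fact("90-day notice", "is sent by", "property manager", "ops_manual.pdf"),
    Fact("security deposit", "is capped at", "two months rent", "policy_handbook.pdf"),
]

question = "What does a lease renewal require?"
hits = retrieve_facts(question, FACTS)
prompt = build_prompt(question, hits)
print(prompt)
```

Because every line of context carries its source, an answer generated from this prompt can be checked against the original documents.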

The KG-RAG Advantage

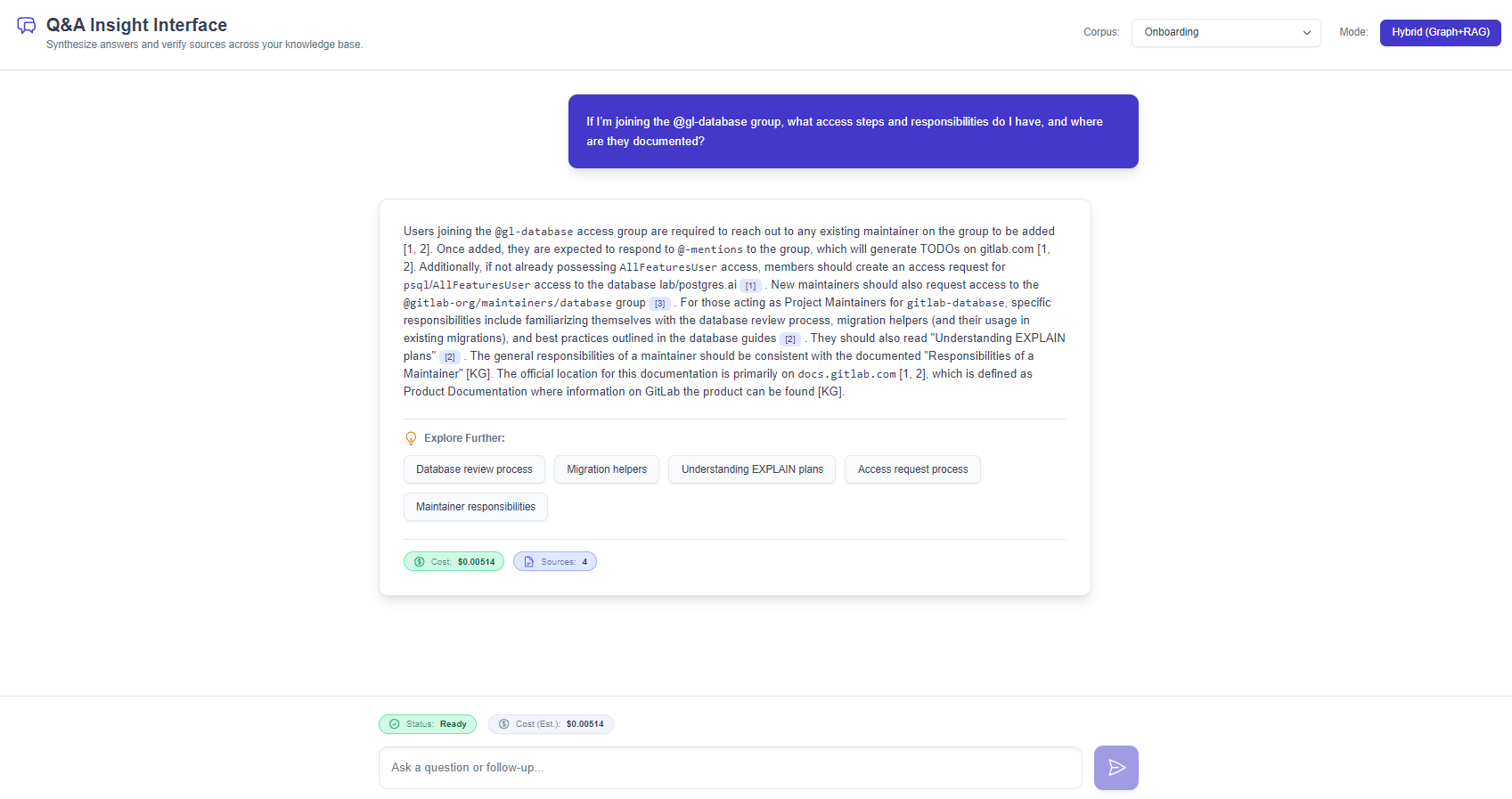

By grounding answers in the Knowledge Graph, the system ensures fidelity to organizational expertise. It provides verifiable citations, enables complex multi-hop reasoning, and delivers deeply contextualized answers, creating true institutional memory.

Project Overview

Business Value

Transforms scattered documents into dynamic knowledge systems. Drastically reduces onboarding time, ensures organizational resilience, provides auditability, and scales expertise without proportional headcount.

My Role

Sole architect. Owned vision and system design driven by firsthand knowledge management challenges. Conducted R&D in AI methodologies, KG architecture, and RAG. Validated core architecture and features, demonstrating technical feasibility for production scaling.

Key Innovation

Automatic Knowledge Graph generation from raw data, enabling sophisticated KG-RAG at scale (100,000+ nodes) while ensuring fidelity to source material.

Architecture Components

Interface Showcase

Visual overview of the Insight Engine's core interfaces.

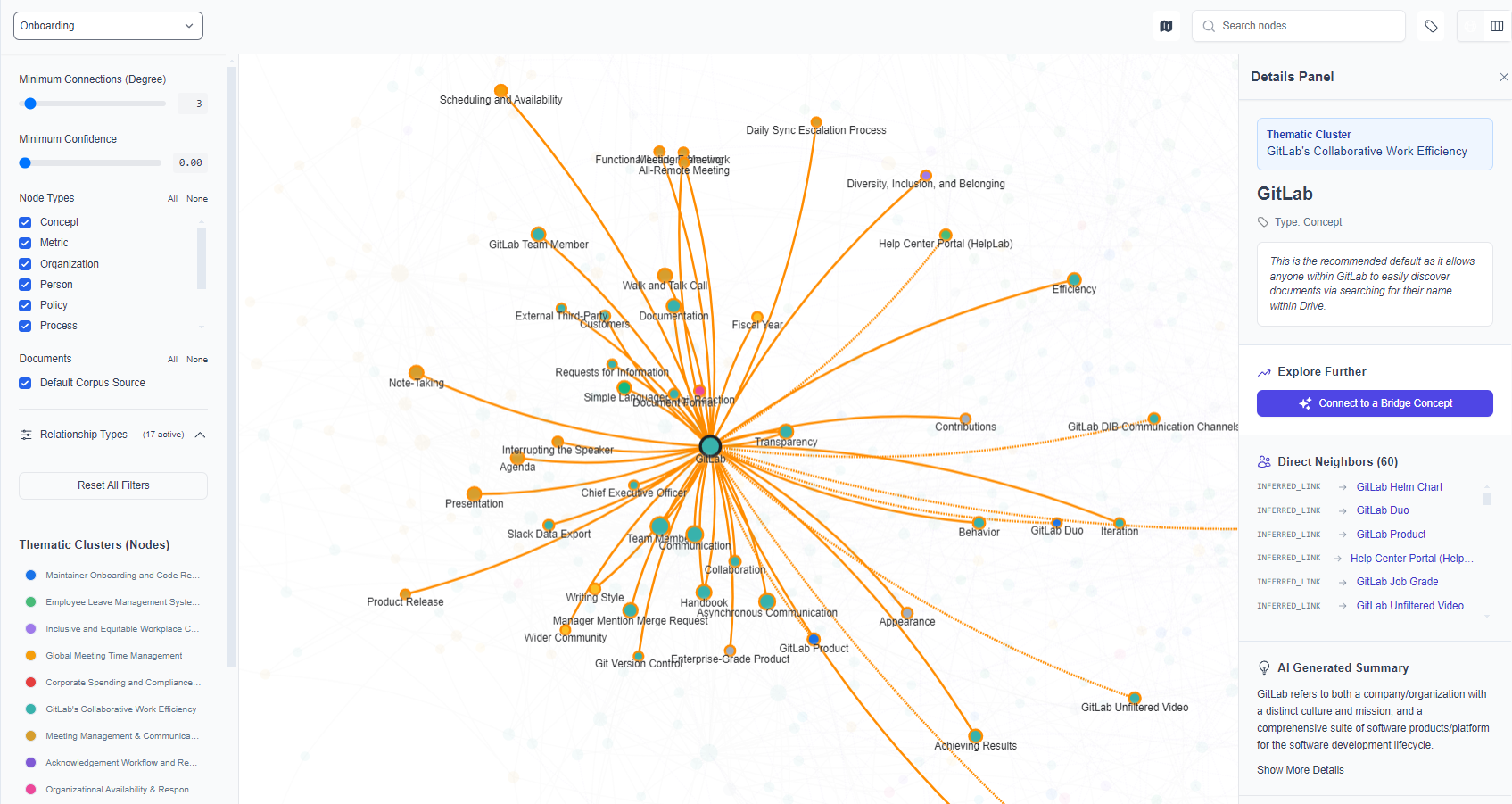

1. Knowledge Graph Explorer

Visualize and interact with the automatically generated knowledge graph, exploring relationships between concepts.

2. KG-RAG Q&A Interface

Ask complex questions and receive synthesized answers grounded in the knowledge graph, with precise citations.

3. Thematic Clustering

High-level corpus overview using ML (UMAP/DBSCAN) to cluster concepts and identify major themes automatically.

Value Proposition

Use Cases

Validated across finance (due diligence), academia (research synthesis), and property management (regulatory compliance).

Accelerated Onboarding

Dramatically reduce time-to-competency by providing instant access to structured, contextualized knowledge.

- Instant, accurate answers from entire knowledge base

- Visual KG maps showing concept relationships

- Reduced dependency on senior staff availability

Advanced Research & Discovery

Empower researchers to uncover hidden connections and synthesize insights across thousands of documents.

- "Global Brain" identifies cross-document relationships

- Thematic clustering reveals major topic areas

- Multi-hop reasoning enables complex queries

Reliable AI with Auditability

Provide foundational guidelines and memory for consistent, trustworthy AI assistants.

- KG acts as structured ground truth

- Precise citations enable verification

- Reduced hallucinations through constrained retrieval

Dynamic Knowledge Management

Transform static repositories into living knowledge bases that evolve automatically.

- Auto-generates KG from unstructured data

- Visual analysis reveals knowledge gaps

- Continuous evolution as documents are added

Development Status

Architectural Validation, Not Production Deployment

This system represents architectural validation. It proves the approach works: automated KG generation plus KG-RAG solves real problems at scale.

Production deployment requires additional engineering (DevOps pipelines, monitoring, security audits, compliance certifications), work that is outside the scope of architectural R&D.

What the Prototype Proves

The hard problems are solved: automated knowledge extraction, entity resolution at scale, cross-document synthesis, efficient graph traversal. The architecture is sound.

What remains is engineering execution: a resource question, not a conceptual one. For organizations evaluating this approach, the prototype demonstrates technical feasibility.

Technical Design

Architecture for Scale

Designed to scale to 100,000+ nodes while remaining interactive, solving the "hairball problem" (dense graph visualizations that tangle into an unreadable mass).

Fluid Interactivity

Heavy computations offloaded to Web Workers, ensuring responsive UI even with massive datasets.

Value: Seamless exploration of large knowledge graphs without UI freezing.

Instant Search

List virtualization (react-window) renders only the rows currently in view, keeping display and search responsive across all 100k+ nodes.

Value: Instant results regardless of data size.
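The arithmetic behind list virtualization is language-agnostic, so here is a minimal Python sketch of it rather than React code. It computes which row indices need rendering for a given scroll position; the function name, parameters, and the fixed row height are assumptions for illustration, not react-window's API.

```python
def visible_range(scroll_top: int, row_height: int, viewport_height: int,
                  total_rows: int, overscan: int = 2) -> tuple[int, int]:
    """Return the inclusive (first, last) row indices to render.

    Assumes every row has the same pixel height. `overscan` adds a few
    extra rows above and below the viewport to reduce flicker on scroll.
    """
    first_visible = scroll_top // row_height
    last_visible = (scroll_top + viewport_height - 1) // row_height
    first = max(0, first_visible - overscan)
    last = min(total_rows - 1, last_visible + overscan)
    return first, last


# Hypothetical numbers: 100,000 rows of 35 px in a 700 px viewport.
first, last = visible_range(scroll_top=35_000, row_height=35,
                            viewport_height=700, total_rows=100_000)
print(f"render rows {first}..{last} ({last - first + 1} of 100000)")
```

However long the list grows, the number of rendered rows depends only on the viewport size and overscan.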

Automated Discovery

Python ML (UMAP/DBSCAN) automatically clusters related concepts, generating high-level "Conceptual Maps."

Value: Instant corpus overview with AI-labeled topics.
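To make the clustering step concrete, the sketch below is a small, dependency-free DBSCAN written for illustration. It is not the project's code, which would more plausibly call library implementations, and it skips the UMAP step entirely: the 2-D points are hypothetical stand-ins for concept embeddings that have already been reduced. The concept names, `eps`, and `min_pts` values are likewise assumptions.

```python
from __future__ import annotations

import math

NOISE = -1


def dbscan(points: list[tuple[float, float]], eps: float, min_pts: int) -> list[int]:
    """Label each point with a cluster id (0, 1, ...) or NOISE (-1)."""

    def neighbors(i: int) -> list[int]:
        # Indices of all points within eps of point i (including i itself).
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    labels: list[int | None] = [None] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may later be claimed as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nbrs = neighbors(j)
            if len(nbrs) >= min_pts:  # j is a core point, so keep expanding
                queue.extend(nbrs)
    return labels


# Hypothetical concepts with made-up 2-D coordinates.
concepts = ["lease", "tenant", "deposit", "audit", "ledger", "invoice", "parking"]
points = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1),
          (5.0, 5.0), (5.1, 5.0), (5.0, 5.1),
          (10.0, 0.0)]
labels = dbscan(points, eps=0.3, min_pts=3)
for concept, label in zip(concepts, labels):
    print(concept, "noise" if label == NOISE else f"cluster {label}")
```

A density-based method suits this use because the number of themes does not have to be chosen in advance, and outlying concepts are left unclustered rather than forced into a theme.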

System Architecture Diagram

Innovation Spotlight

The "Global Brain" Feature

Automated Insight Discovery

The Global Brain automates insight discovery: it runs after document processing and builds connections between documents, turning siloed documents into integrated knowledge.

The Impact

This transforms the system from search engine to research partner. At query time, the AI traverses inferred "bridge" edges, automatically synthesizing context from multiple documents and revealing insights that would take weeks to find manually.
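One way to picture cross-document traversal is the sketch below. It makes a simplifying assumption: an entity that appears in more than one document acts as the bridge, whereas the actual system infers its bridge edges by a method not described here. All document names, entities, and function names are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict, deque
from itertools import combinations

# Hypothetical output of per-document entity extraction.
DOC_ENTITIES = {
    "lease_policy.pdf": {"lease renewal", "notice period"},
    "ops_manual.pdf": {"notice period", "property manager"},
    "staff_directory.pdf": {"property manager", "regional office"},
}


def build_graph(doc_entities: dict[str, set[str]]) -> dict[str, dict[str, str]]:
    """Link entities that co-occur in a document; tag each edge with its source."""
    graph: dict[str, dict[str, str]] = defaultdict(dict)
    for doc, entities in doc_entities.items():
        for a, b in combinations(sorted(entities), 2):
            graph[a][b] = doc
            graph[b][a] = doc
    return graph


def bridge_entities(doc_entities: dict[str, set[str]]) -> dict[str, set[str]]:
    """Entities found in more than one document connect otherwise siloed files."""
    seen: dict[str, set[str]] = defaultdict(set)
    for doc, entities in doc_entities.items():
        for entity in entities:
            seen[entity].add(doc)
    return {e: docs for e, docs in seen.items() if len(docs) > 1}


def multi_hop_path(graph, start: str, goal: str):
    """Breadth-first search; returns [(entity, next_entity, source_doc), ...] or None."""
    parent: dict[str, str | None] = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            break
        for nxt in sorted(graph[node]):
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    if goal not in parent:
        return None
    hops = []
    node = goal
    while parent[node] is not None:  # walk back from goal to start
        prev = parent[node]
        hops.append((prev, node, graph[prev][node]))
        node = prev
    return list(reversed(hops))


graph = build_graph(DOC_ENTITIES)
bridges = bridge_entities(DOC_ENTITIES)
path = multi_hop_path(graph, "lease renewal", "regional office")
for a, b, doc in path:
    print(f"{a} -> {b}  (from {doc})")
```

In this toy data the path from "lease renewal" to "regional office" crosses all three documents, and each hop keeps the document it came from, so a synthesized answer can still be cited.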

Interested in Learning More?

This project demonstrates capacity for architecting complex, data-intensive AI applications. Questions about the architecture or potential opportunities?